- The Document Digest by Tensorlake

- Posts

- Turning Document Chaos into Structured Gold

Turning Document Chaos into Structured Gold

Most LLMs choke on real-world documents: PDFs with 100+ pages, spreadsheets packed with edge cases, or forms where the layout is half the meaning. And if you’ve ever tried to extract nested data from these formats, you know that it’s rarely as simple as “just call an API.”

Since launching Document AI, we’ve helped real-world businesses structure the unstructured. We’ve learned:

LLMs struggle with deeply nested fields or schemas with 100+ data points

Accuracy tanks when the critical info is buried on page 86 of a 100-page doc

So we shipped the most powerful structured extraction system yet:

Page Classification + Filtering — Pre-filter irrelevant pages to boost accuracy by 10–15% on large documents

Arrayed Schemas — Define multiple schemas independently in one call, making complex data extraction more accurate without added complexity

Coming soon: Citations for extracted fields, so you’ll know exactly where each value came from.

This edition of the Document Digest dives into how we’re making structured extraction smarter, more scalable, and LLM-native.

Got a gnarly document you’re trying to parse? Book a time and help shape what’s next.

Structured Extraction with Page Classification

To extract structured data from only the relevant pages of a document, use the page_classes option in your structured extraction request. This allows you to reference page classes you previously defined and only run schema extraction on pages that match.

Try this out in this colab notebook.

Example

Define your page classes:

Define your structured data extraction schemas:

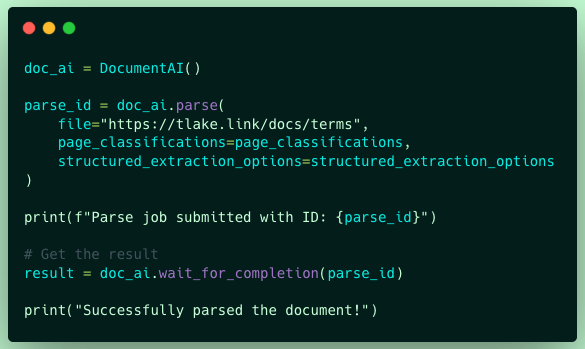

Parse your document

Use the results

In this example, the extractor first classifies each page and only applies your schemas to pages labeled "signature_page" or "terms_and_conditions".

This improves performance and accuracy, especially in documents with 30+ pages, by reducing irrelevant noise for the LLM.

Read the announcement on our blog (colab notebook included) or get started with our docs.

Try our latest integrations

LangChain x Tensorlake

Try our our LangChain Tool next time you build a LangGraph agent

Learn why Tensorlake is the solution to ensuring all relevant data is extracted and ready to integrate within your agentic workflow in our blog post.

Watch a 5-min video tutorial on how to use the LangChain Tool

Learn how to use the Tool with this quick tutorial (colab notebook included)

Qdrant x Tensorlake

Learn how to implement next-level RAG with structured filtering, semantic search, and comprehensive document understanding, all integrated seamlessly in our Qdrant x Tensorlake blog post.

Watch a 10-min video tutorial on how to use Tensorlake, Qdrant, and LangGraph to get RAG, without compromise.

Learn how to use the tool with this quick tutorial (colab notebook included)

Tensorlake TL;DR

Page Classifications

Pre-filter irrelevant pages to boost accuracy by 10–15% on large documents

Define multiple schemas independently in one call, making complex data extraction more accurate without added complexity

API v2 Released

Supports multiple Structured Data Extraction schemas plus Page Classification in the same, single parsing API call.

Enables partition-by-page, section, or fragment extraction to handle multi-section documents cleanly.

Check out the full API reference in our docs

Make sure you’re running the latest version of the Python SDK

Major Model Improvements

Improved checkbox detection, complex table parsing, handwriting, strikethrough, signatures, and mixed media content accuracy.

Expanded Format Support

Tensorlake DocAI now robustly handles: spreadsheets, CSV, presentations, Word, PDF, raw text, and images.

Why does this matter for developers

Cleaner inputs for RAG, agent pipelines, and VectorDBs/ETL, enabling pipelines to act on trustworthy structured fields and layouts, not just raw blobs.

Single API call now handles extraction + classification + layout + markdown chunking. No brittle multi-step orchestration.

Multi-format support saves devs from custom ingestion hacks for Excel, scans, and hybrid docs.

Until next time, happy parsing, extracting, and powering smarter agents with cleaner data.

—Sarah and the Tensorlake Team

The Tensorlake TL;DR Table

What We Launched | How It Helps Devs |

|---|---|

More features, improved developer experience | |

Pre-filter irrelevant pages to boost accuracy by 10–15% on large documents | |

Faster agent embedding pipelines & docs parsing | |

Structured parse → Vector search in one flow | |

Model upgrades | Better detection of checkboxes, tables, handwriting |

Expanded format support | Reliable ingestion across all common document types |